What “Project Distress” Means (and Why It Matters Early)

Project distress is a condition where a project’s ability to meet its intended outcomes (scope, schedule, cost, quality, and stakeholder value) is degrading in a way that is likely to compound without intervention. Distress is not the same as “a project having problems.” All projects have issues; distress is when the pattern of issues indicates loss of control, loss of predictability, or loss of trust.

A useful way to think about distress is as a shift from manageable variance to systemic variance:

- Manageable variance: deviations are visible, bounded, and correctable with routine actions (re-planning a sprint, adding a reviewer, clarifying a requirement).

- Systemic variance: deviations are recurring, cross-cutting, and resistant to routine fixes (rework keeps rising, dates keep slipping despite “catch-up” plans, stakeholders stop believing status reports).

Early recognition matters because turnarounds become much harder once teams have waited for “proof” that the project is in trouble. By the time proof is undeniable, options are fewer: budgets are committed, stakeholders are polarized, and technical debt or contractual constraints limit maneuvering. The goal is to detect distress while it is still reversible.

Distress vs. Normal Delivery Noise

To avoid overreacting, distinguish between normal delivery noise and distress signals. Normal noise is typically isolated and explainable; distress signals are patterned and predictive.

Normal noise characteristics

- One-off misses with a clear root cause and a credible corrective action.

- Variance stays within agreed tolerances (for example, a milestone slips by a few days but downstream work is not destabilized).

- Stakeholders remain aligned on priorities and trade-offs.

- Quality metrics remain stable (defects, incidents, rework do not trend upward).

Distress characteristics

- Repeated misses that require repeated “exceptions” or “heroic efforts.”

- Plans are frequently rewritten, but outcomes do not improve.

- Decision-making slows or becomes political; approvals become harder to obtain.

- Quality degrades or becomes unknown because measurement is avoided.

When you see distress characteristics, treat them as leading indicators. You do not need to wait for a major failure event.

Early Warning Signals: A Practical Taxonomy

Early warning signals are observable cues that the project system is drifting toward failure. They appear in multiple domains. A single signal may not mean distress; clusters across domains usually do.

1) Schedule and planning signals

- Chronic milestone slippage: dates move repeatedly, often by small increments (“two more weeks”) that accumulate.

- High plan churn: frequent re-baselining without a corresponding change in scope or strategy.

- Work expands to fill time: tasks remain “in progress” for long periods; completion criteria are vague.

- Critical path ambiguity: no one can clearly state what must happen next to protect the finish date.

- Dependency surprises: external teams or vendors are “suddenly” blocking progress, indicating weak dependency management.

2) Scope and requirements signals

- Uncontrolled scope growth: new requests enter without trade-offs, or “must-have” lists expand.

- High rework due to unclear requirements: features are built, reviewed, and then reinterpreted.

- Acceptance criteria missing or shifting: teams cannot define “done” in testable terms.

- Backlog inflation: the backlog grows faster than throughput, even after prioritization meetings.

3) Cost and resource signals

- Burn rate exceeds value delivered: spending continues while deliverables remain intangible or repeatedly deferred.

- Hidden overtime: sustained after-hours work becomes normal, often untracked or minimized in reports.

- Key-person dependency: progress depends on one or two individuals; absence causes stalls.

- High turnover or role churn: frequent changes in product owner, project manager, tech lead, or vendor contacts.

4) Quality and technical signals

- Defect trend rising: defects found late increase, or production incidents spike.

- Testing squeezed: test cycles shorten to “protect the date,” increasing downstream risk.

- Integration pain: components work in isolation but fail when combined; integration becomes a recurring crisis.

- Technical debt acceleration: shortcuts become policy; “we’ll fix it later” becomes the default.

- Environment instability: build pipelines, test environments, or data sets are unreliable, slowing feedback loops.

5) Stakeholder and governance signals

- Status becomes performative: reports emphasize effort and activity rather than outcomes and evidence.

- Escalations increase: more issues require senior intervention, indicating local decision-making breakdown.

- Conflicting success definitions: different stakeholders measure success differently (speed vs. compliance vs. feature completeness).

- Approval bottlenecks: decisions stall in committees; risk aversion rises.

- Trust erosion: stakeholders ask for more detail, more meetings, and more proof, often in response to surprises.

6) Team dynamics and execution signals

- Blame patterns: “them vs. us” language between teams (engineering vs. QA, business vs. IT, client vs. vendor).

- Meeting overload: coordination time grows while delivery time shrinks.

- Low psychological safety: people stop raising risks; bad news arrives late.

- Work avoidance: hard tasks are deferred; easy tasks are completed to show progress.

- Inconsistent working agreements: no shared definition of done, no stable cadence, or frequent exceptions.

Defining Project Distress with Thresholds (So It’s Not Just a Feeling)

To act early, define distress using thresholds that convert signals into a decision. Thresholds should be simple enough to apply quickly and consistent enough to compare across time.

A three-level model: Watch, Concern, Distress

Use three levels to avoid binary thinking:

- Watch: early signals appear, but impact is limited and corrective actions are working.

- Concern: multiple signals cluster; predictability is declining; corrective actions are not producing improvement.

- Distress: the project cannot reliably forecast outcomes; trust is eroding; risks are compounding faster than they are being reduced.

Example threshold set (adapt to your context)

- Schedule: two consecutive reporting cycles with milestone slippage, or forecast confidence below an agreed level.

- Scope: more than a defined percentage of work enters “in progress” without acceptance criteria, or rework exceeds a set portion of capacity.

- Quality: defect leakage increases over two cycles, or test coverage/automation drops below a minimum.

- Stakeholders: repeated escalations, or stakeholders request parallel reporting because they distrust the main report.

- Team: sustained overtime for more than a set period, or key roles change more than once in a quarter.

The exact numbers depend on your domain. The key is to define them before the crisis and to apply them consistently.
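As a rough illustration, the example threshold set could be encoded so that it is applied the same way every cycle. This is a minimal sketch, not a prescribed tool: the `CycleMetrics` fields, the `breached_domains` function, and all numeric limits are hypothetical placeholders to be replaced with values agreed for your context.

```python
from dataclasses import dataclass

# Placeholder limits (assumptions); agree on real values before a crisis.
MAX_REWORK_SHARE = 0.25    # rework as a fraction of capacity
MAX_ESCALATIONS = 2        # escalations per reporting cycle
MAX_OVERTIME_WEEKS = 4     # consecutive weeks of sustained overtime


@dataclass
class CycleMetrics:
    """Observations for one reporting cycle (field names are illustrative)."""
    milestone_slipped: bool
    rework_share: float
    defect_leakage: int
    escalations: int
    overtime_weeks: int


def breached_domains(history: list[CycleMetrics]) -> list[str]:
    """Return the domains whose threshold is breached, given cycles oldest first."""
    if not history:
        return []
    latest = history[-1]
    breached = []
    # Schedule: milestone slippage in two consecutive cycles.
    if len(history) >= 2 and all(c.milestone_slipped for c in history[-2:]):
        breached.append("schedule")
    # Scope: rework exceeds the agreed portion of capacity.
    if latest.rework_share > MAX_REWORK_SHARE:
        breached.append("scope")
    # Quality: defect leakage rose in each of the last two cycles.
    if len(history) >= 3:
        a, b, c = (m.defect_leakage for m in history[-3:])
        if a < b < c:
            breached.append("quality")
    # Stakeholders: repeated escalations in the latest cycle.
    if latest.escalations >= MAX_ESCALATIONS:
        breached.append("stakeholders")
    # Team: overtime sustained beyond the agreed period.
    if latest.overtime_weeks > MAX_OVERTIME_WEEKS:
        breached.append("team")
    return breached
```

The function only reports which domains crossed a line. Deciding whether that amounts to Watch, Concern, or Distress is left to the team, since a breach in one domain matters less than breaches in several.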

A Step-by-Step Method to Recognize Early Warning Signals

The following method is designed for rapid, repeatable detection. It can be run in 60–120 minutes weekly, or as a focused 2–3 hour workshop when you suspect trouble.

Step 1: Establish the “evidence set” (what you will look at)

Collect a small set of artifacts that reveal reality, not intentions. Keep it lightweight and consistent.

- Latest plan or milestone map (even if informal).

- Delivery board/backlog snapshot (items in progress, blocked, done).

- Defect/incident summary (opened vs. closed, severity, age).

- Change requests or scope decisions made in the last period.

- Risks/issues log (or equivalent), including age and owner.

- Stakeholder feedback (emails, meeting notes, escalations).

If these artifacts do not exist or are unreliable, that itself is a distress signal: the project lacks observability.

Step 2: Run a “signal scan” across domains

Use a structured checklist and mark each signal as: not present, present, or unknown. “Unknown” is important; unknowns often hide the biggest risks.

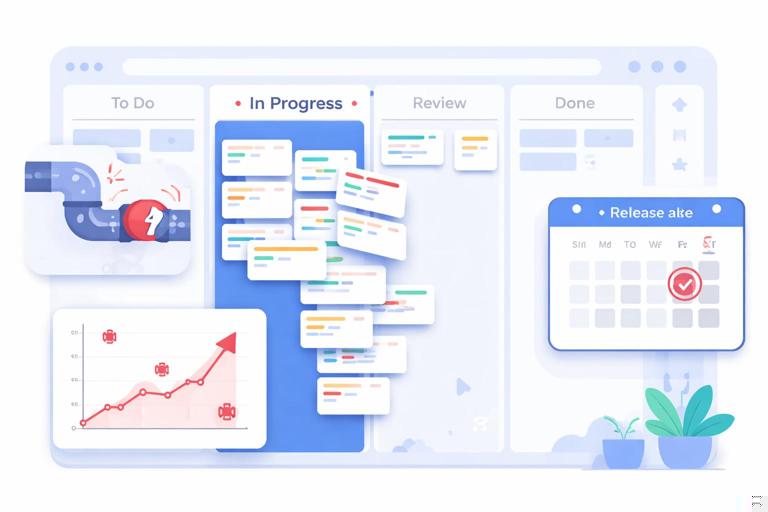

Signal Scan (example fields)

- Schedule: slippage? plan churn? dependency surprises?

- Scope: unclear acceptance? rework? backlog inflation?

- Cost/Resources: overtime? turnover? key-person dependency?

- Quality/Tech: defect trend? integration pain? environment instability?

- Stakeholders: trust erosion? escalations? conflicting success definitions?

- Team: blame? meeting overload? risk silence?

Do this scan with at least two perspectives (for example, delivery lead and product lead). Misalignment between perspectives is itself a signal.
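One way to surface misalignment between two perspectives is to compare their scans signal by signal. The sketch below assumes each scan is a plain dictionary mapping a signal name to one of the three markings; the function name `compare_scans` and the choice to treat a missing entry as "unknown" are illustrative assumptions.

```python
def compare_scans(scan_a: dict[str, str], scan_b: dict[str, str]) -> dict[str, tuple[str, str]]:
    """Return the signals that two scans mark differently, with both markings."""
    disagreements = {}
    for signal in sorted(set(scan_a) | set(scan_b)):
        # A signal one person did not mark at all is treated as "unknown" (assumption).
        mark_a = scan_a.get(signal, "unknown")
        mark_b = scan_b.get(signal, "unknown")
        if mark_a != mark_b:
            disagreements[signal] = (mark_a, mark_b)
    return disagreements


delivery_lead = {"slippage": "present", "rework": "not present", "overtime": "present"}
product_lead = {"slippage": "present", "rework": "present"}

for signal, (a, b) in compare_scans(delivery_lead, product_lead).items():
    print(f"{signal}: delivery lead says {a!r}, product lead says {b!r}")
```

Each disagreement is a prompt for conversation rather than a verdict on who is right.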

Step 3: Identify clusters and patterns (not isolated events)

Look for combinations that commonly indicate distress:

- Schedule slip + testing squeezed + defect trend rising suggests a quality spiral.

- Scope growth + unclear acceptance criteria + rework suggests requirements instability.

- Plan churn + dependency surprises + approval bottlenecks suggests governance friction.

- Overtime + key-person dependency + environment instability suggests fragile execution capacity.

Patterns are more predictive than any single metric.
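The four combinations above can be checked mechanically once the signal scan is recorded. The following is a minimal sketch under stated assumptions: the signal labels are simplified strings chosen for illustration, and `min_hits` (how many signals of a pattern must be present before it counts as a match) is a hypothetical tuning parameter.

```python
# Pattern name -> the signals that make it up, taken from the list above.
PATTERNS = {
    "quality spiral": {"schedule slip", "testing squeezed", "defect trend rising"},
    "requirements instability": {"scope growth", "unclear acceptance criteria", "rework"},
    "governance friction": {"plan churn", "dependency surprises", "approval bottlenecks"},
    "fragile execution capacity": {"overtime", "key-person dependency", "environment instability"},
}


def matching_patterns(present: set[str], min_hits: int = 3) -> list[str]:
    """Return the patterns with at least `min_hits` of their signals present."""
    return [
        name
        for name, signals in PATTERNS.items()
        if len(signals & present) >= min_hits
    ]


observed = {"schedule slip", "testing squeezed", "defect trend rising", "overtime"}
print(matching_patterns(observed))              # full matches only
print(matching_patterns(observed, min_hits=1))  # also partial matches worth watching
```

Lowering `min_hits` lists patterns that are only partly formed, which may be useful at the Watch level.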

Step 4: Convert patterns into a distress level

Apply your thresholds. If you do not have thresholds yet, use a simple scoring approach to start:

- Assign 0 for not present, 1 for present, 2 for unknown (unknown is risk).

- Sum by domain and overall.

- Define provisional cutoffs (for example, overall score above a certain level indicates Concern; above a higher level indicates Distress).

The goal is not mathematical precision; it is consistent decision-making.
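The scoring approach can be sketched in a few lines. The 0/1/2 scores come from the steps above; the cutoff values, the function names, and the decision to label everything at or below the lower cutoff as "Watch" are provisional assumptions for illustration only.

```python
# Scores from the method above: unknown counts as risk, so it scores highest.
SCORES = {"not present": 0, "present": 1, "unknown": 2}


def score_scan(scan: dict[str, dict[str, str]]) -> tuple[dict[str, int], int]:
    """Sum scores by domain and overall. `scan` maps domain -> {signal: marking}."""
    by_domain = {
        domain: sum(SCORES[marking] for marking in signals.values())
        for domain, signals in scan.items()
    }
    return by_domain, sum(by_domain.values())


def distress_level(overall: int, concern_cutoff: int = 6, distress_cutoff: int = 12) -> str:
    """Map an overall score to a level using provisional (assumed) cutoffs."""
    if overall > distress_cutoff:
        return "Distress"
    if overall > concern_cutoff:
        return "Concern"
    return "Watch"


scan = {
    "schedule": {"slippage": "present", "plan churn": "present", "dependency surprises": "unknown"},
    "quality": {"defect trend": "present", "integration pain": "unknown", "environment instability": "unknown"},
}
by_domain, overall = score_scan(scan)
print(by_domain, overall, distress_level(overall))
```

Keeping the per-domain sums alongside the total shows where the score comes from, which helps when the level is challenged.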

Step 5: Write a one-paragraph distress definition for this project

Distress should be described in plain language tied to evidence. This prevents vague labels and helps align stakeholders.

Template:

This project is in [Watch/Concern/Distress] because [2–4 evidence-based signals]. If unchanged, the most likely outcomes are [1–2 impacts]. The immediate uncertainty we must resolve is [key unknown].

Example:

This project is in Concern because the release date has slipped in two consecutive cycles, rework is consuming roughly a third of capacity due to shifting acceptance criteria, and defect counts are rising while testing time is being reduced. If unchanged, we will miss the regulatory deadline or ship with unacceptable defect risk. The immediate uncertainty we must resolve is whether the current scope is feasible with the available integration environment and vendor dependency dates.

Step 6: Validate with a “triangulation” conversation

Run a short validation with three groups: delivery team, business/stakeholders, and any external dependency owners (vendors, platform teams). Ask the same three questions:

- What is the most important thing that could cause us to miss the outcome?

- What evidence do you have that this risk is increasing or decreasing?

- What decision are we avoiding?

When answers diverge sharply, you have uncovered a distress driver: misaligned reality.

Common Distress Archetypes (So You Can Recognize the Shape of Trouble)

Distressed projects often fall into recognizable archetypes. Naming the archetype helps teams stop treating symptoms and start addressing the underlying mechanism.

The “Date-Driven Mirage”

- Signals: optimistic forecasts, repeated “almost done,” testing compressed, late surprises.

- Mechanism: commitments are made without evidence; progress is measured by activity, not completed value.

- What to look for: large amount of work in progress, unclear definition of done, weak integration/testing evidence.

The “Scope Hydra”

- Signals: requirements churn, expanding must-haves, rework, stakeholder disagreements.

- Mechanism: prioritization is not binding; trade-offs are avoided.

- What to look for: no stable acceptance criteria, frequent reinterpretation after demos, backlog growth.

The “Dependency Trap”

- Signals: blockers attributed to other teams, vendor delays, integration failures, waiting time.

- Mechanism: critical dependencies are unmanaged or discovered late.

- What to look for: missing dependency map, unclear owners, no contingency plans.

The “Quality Debt Spiral”

- Signals: rising defects, unstable environments, repeated hotfixes, slower delivery despite more effort.

- Mechanism: shortcuts reduce feedback quality; rework grows; throughput declines.

- What to look for: increasing cycle time, more time spent fixing than building, fragile build/test pipeline.

The “Governance Gridlock”

- Signals: slow decisions, many steering meetings, unclear authority, escalations.

- Mechanism: accountability is diffused; risk is managed through process rather than decisions.

- What to look for: unresolved decisions aging, conflicting stakeholder success criteria, repeated re-approval.

Practical Examples: Turning Signals into a Distress Definition

Example 1: Software product release

Observed signals: 40% of stories are “in progress” for more than two weeks; integration environment is down twice per week; defects opened exceed defects closed for three weeks; release date unchanged in reporting despite slippage.

Distress definition: The project is in Distress because delivery flow is stalled (high work-in-progress age), integration is unreliable, and defect trends indicate quality is deteriorating while reporting remains optimistic. The likely outcome is a late or unstable release. The key unknown is whether the integration environment can be stabilized quickly enough to restore a reliable feedback loop.

Example 2: Construction/engineering project phase

Observed signals: repeated design revisions after stakeholder reviews; procurement lead times not confirmed; subcontractor schedule assumptions differ from the master plan; change requests are approved without schedule impact analysis.

Distress definition: The project is in Concern because design churn is driving rework, procurement dependencies are uncertain, and the schedule is not grounded in confirmed lead times. The likely outcome is downstream installation delays and cost growth. The key unknown is whether scope changes can be frozen long enough to finalize procurement and lock a feasible sequence.

Example 3: Compliance/regulatory initiative

Observed signals: unclear interpretation of requirements; multiple stakeholder groups disagree on “minimum compliant”; testing evidence is not traceable to requirements; approvals require multiple committees.

Distress definition: The project is in Distress because compliance criteria are not testable or agreed, evidence is not traceable, and governance delays prevent timely decisions. The likely outcome is failure to demonstrate compliance by the deadline. The key unknown is which interpretation will be accepted by the approving authority and how that maps to testable controls.

Building a Shared Language: Distress Indicators as “Observable Behaviors”

One reason early warning signals are missed is that teams describe them as opinions (“it feels chaotic”). Convert opinions into observable behaviors that anyone can verify.

- Instead of “planning is bad,” use “milestones have been re-baselined three times in six weeks without scope reduction.”

- Instead of “requirements are unclear,” use “more than half of items started this month lacked acceptance criteria at start.”

- Instead of “quality is suffering,” use “defects older than 14 days increased by 25% and testing time was reduced.”

- Instead of “stakeholders don’t trust us,” use “stakeholders requested a separate weekly report and escalated two items to the steering group.”

This language reduces defensiveness and makes it easier to agree on whether distress exists.
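Observable statements like these are easiest to defend when they are computed from records rather than recalled. As one hedged example, the acceptance-criteria statement could be derived from a work-item export. The `WorkItem` fields and the function name below are hypothetical and would need to be mapped to whatever your tracking tool provides.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class WorkItem:
    """One backlog item (illustrative fields, not a real tool's schema)."""
    started: date
    had_acceptance_criteria_at_start: bool


def share_started_without_criteria(items: list[WorkItem], year: int, month: int) -> float:
    """Fraction of items started in the given month that lacked acceptance criteria."""
    started = [i for i in items if i.started.year == year and i.started.month == month]
    if not started:
        return 0.0
    missing = sum(1 for i in started if not i.had_acceptance_criteria_at_start)
    return missing / len(started)


items = [
    WorkItem(date(2024, 5, 2), False),
    WorkItem(date(2024, 5, 9), False),
    WorkItem(date(2024, 5, 15), True),
    WorkItem(date(2024, 5, 20), False),
    WorkItem(date(2024, 4, 28), True),   # started in a different month, so ignored
]
share = share_started_without_criteria(items, 2024, 5)
print(f"{share:.0%} of items started this month lacked acceptance criteria at start")
```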

Quick Diagnostic Workshop: 90-Minute Early Warning Session

If you need a fast, structured way to recognize distress with a group, run this 90-minute session.

Agenda

- 0–10 min: State the purpose: identify early warning signals and agree on a distress level using evidence.

- 10–25 min: Review the evidence set (plan, backlog, defects, risks, changes, stakeholder notes).

- 25–45 min: Signal scan (each participant marks present/not present/unknown).

- 45–65 min: Cluster patterns and select top 3 signal clusters.

- 65–80 min: Agree on Watch/Concern/Distress and draft the one-paragraph distress definition.

- 80–90 min: Identify the top 3 unknowns to resolve next (not solutions yet), assign owners to gather evidence.

Facilitation tips

- Keep the discussion anchored to artifacts and recent events.

- Capture “unknowns” explicitly; do not let the group hand-wave them away.

- When debate becomes circular, ask: “What evidence would change your mind?”

- Separate “distress recognition” from “recovery planning” to avoid jumping ahead.